Technology changes rapidly and PC hardware is no exception to that rule. Throughout the '90s and early 2000s we saw some pretty ridiculous advances in computing power, going from single-core CPUs in the 100-200MHz range to the 4/6/8-core 3-4GHz monsters of today.

After deciding that our family's relic from 1999 just wouldn't cut it any more, I built my first computer in 2002 and traded up from a P3 450MHz with PC133 SDRAM to an Athlon XP 2100+ at 1.73GHz and 512MB of DDR. The old machine originally had a Voodoo Banshee 16MB video card, which was apparently "the ultimate bridge between 3D entertainment and PC graphics." I had previously swapped out that card for a GeForce 2 Ti and I further upgraded to a GeForce 4 Ti 4200 128MB with my new build. In a span of three years I moved to a new CPU architecture with more than triple the clock speed of the Pentium III, adopted the first generation of DDR, and picked up a video card with eight times the VRAM and many times the performance of my Banshee. Pretty crazy.

The beautiful Voodoo Banshee. Image: Maximum PC

Examining the Pace of Innovation

The trend has continued in years since, but a couple of articles from this week have me wondering if things might be slowing down. ExtremeTech published an article titled "PC obsolescence is obsolete" on Tuesday that compares the jump in tech from 1996 to 2000 and contrasts it with the advances we've seen from 2008 to 2012. Here's what they had to say about the first four-year span:

"In four years, CPU clockspeed quintupled. Actual performance gains were even higher, thanks to further improvements in CPU efficiency. In 1996, high-performing EDO RAM offered up to 264MB/s of bandwidth, in 2000, PC100 had hit 800MB/s. AGP slots weren’t available in 1996, four years later they were an essential component of an enthusiast PC. USB controllers had gone from dodgy, barely functional schlock that locked up the mouse every time you launched a program, to reasonably reliable. 32MB of RAM was great in 1996, by 2000 you wanted at least 64MB for acceptable performance."

Their current-day performance comparison pits a 2008-era Nehalem i7 system against an Ivy Bridge system of today. Improvements in clock speed and architecture aside, they argue that everything is "roughly comparable": tri-channel 6GB DDR3-1066 kits can still hang with dual-channel 8GB DDR3-1600, and add-in cards can provide things like USB 3.0 ports or SATA III for SSDs.

I'm not sure I agree with their pick of an expensive enthusiast LGA1366 setup from Q4 2008 as the representative tech from several years ago - the 65nm or 45nm LGA775 Core 2 Quad CPUs from 2007/2008 are probably more in line budget-wise with an LGA1155 Ivy Bridge system. Looking back at the Tom's Hardware "System Builder Marathon" series, their June 2008 $1000 system featured a C2D E7200, 2GB of DDR2, and two 8800GTs in SLI. This week's $1000 build has an i5-3570K, 8GB of DDR3, and a GTX670. The 2008 build has trouble handling Crysis at anything higher than 1280x1024 while the 2012 machine could probably hold its own at 2560x1440, a display resolution that's almost three times as big in terms of the sheer number of pixels.
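If you want to check the "almost three times" figure yourself, here's a quick sketch of the arithmetic. The function name `pixel_count` is just something I made up for illustration; the resolutions are the two mentioned above.

```python
def pixel_count(width, height):
    """Total number of pixels for a given display resolution."""
    return width * height

# Resolutions from the 2008 and 2012 builds discussed above
old_pixels = pixel_count(1280, 1024)   # 1,310,720 pixels
new_pixels = pixel_count(2560, 1440)   # 3,686,400 pixels

# How many times more pixels the newer resolution pushes
ratio = new_pixels / old_pixels
print(f"{old_pixels} -> {new_pixels} pixels, ratio = {ratio}")
```

The ratio works out to 2.8125, so "almost three times" holds up.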

CPU Staying Power

The second article that piqued my interest is one from The Tech Report: "Inside the second: Gaming performance with today's CPUs - Does the processor you choose still matter?" They pair a bunch of different processors from the last 3-4 years with an HD 7950 GPU and examine "frame latency" in different gaming benchmarks; instead of looking at simple fps averages they look at the amount of time it takes to actually render each frame. This supposedly is a better estimate of overall performance as it better identifies slowdowns and problem areas than a "minimum FPS" number. You can convert between frame latency and fps with the following equation:

1000 (ms in 1 second) / latency (ms per 1 frame) = fps (frames per 1 second)

In other words, a latency of 8.3ms is 120fps, 16.7ms is the coveted 60fps mark, and 33.3ms is the "unplayable" threshold of 30fps.
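The conversion above is simple enough to sketch in a few lines of Python. The function names here are my own and not anything from Tech Report's tooling.

```python
def latency_ms_to_fps(latency_ms):
    """Convert a per-frame render time in milliseconds to frames per second."""
    return 1000.0 / latency_ms

def fps_to_latency_ms(fps):
    """Convert frames per second to the per-frame time budget in milliseconds."""
    return 1000.0 / fps

# The three thresholds mentioned above
for fps in (120, 60, 30):
    print(f"{fps} fps -> {fps_to_latency_ms(fps):.1f} ms per frame")
```

Running it prints 8.3, 16.7, and 33.3 ms for 120, 60, and 30fps respectively, matching the numbers above.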

For example, here's a graph of the average fps of each CPU in Tech Report's Skyrim benchmark runs:

Image credit: Tech Report

Looks like each CPU runs the game from late 2011 pretty smoothly. For comparison, here's the "time spent beyond 16.7ms," aka the amount of time the game is chugging along under 60fps:

Image credit: Tech Report

Now the performance differences between processors are amplified a bit. Still, dipping below 60fps isn't the end of the world - it's the 30fps area that's important. They even take it to one more extreme and play Skyrim while transcoding video in the background with Windows Movie Maker:

Image credit: Tech Report
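For anyone wondering how a "time spent beyond X ms" number could be computed, here's a rough sketch. I'm assuming the metric adds up only the portion of each frame time that exceeds the threshold, which is my reading of Tech Report's description rather than their actual code. The frame times below are made up purely for illustration.

```python
def time_beyond(frame_times_ms, threshold_ms):
    """Sum the time (in ms) that frames spend past the threshold.

    Assumption: a frame that takes 70 ms against a 50 ms threshold
    contributes 20 ms, and frames under the threshold contribute nothing.
    """
    return sum(t - threshold_ms for t in frame_times_ms if t > threshold_ms)

# Hypothetical per-frame render times in milliseconds (not real benchmark data)
frame_times_ms = [12.0, 15.0, 18.0, 40.0, 16.0, 55.0]

beyond_60fps = time_beyond(frame_times_ms, 1000 / 60)  # past the 16.7 ms mark
beyond_20fps = time_beyond(frame_times_ms, 50.0)       # past the 50 ms mark

print(f"beyond 16.7 ms: {beyond_60fps:.1f} ms")
print(f"beyond 50 ms:   {beyond_20fps:.1f} ms")
```

The nice thing about this style of metric is that one long hitch counts for more than a handful of frames that barely miss the target, which an fps average would hide.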

What do I take away from these tests? The first is that I'm glad I went with an i5-2500K and didn't wait to see what would happen with AMD's Bulldozer. The second thing is that the older processors like the i5-760 and Phenom II X4 980 and X6 1100T really don't perform too terribly in most of these tests, even when they're in some crazy multitasking scenario. The X4 980 is basically a clock speed bump of Phenom IIs from early 2009, and the X6 1100T is likewise a bump of the 1090T from April of 2010. Similarly, the i5-760 is an iteration of the i5-750 from Q3 2009. These processors are all 2-3 years old, and more importantly all running stock configurations, and each one more or less completes each test with minimal time spent in the 20fps zone. You could conceivably overclock each one by upwards of 20-30% with a $50 investment in a decent heatsink and further increase their staying power. It's obviously foolish to expect an i5-760 to compete with an i5-3570K, but both systems provide playable performance, and that's what matters in the end.

Today's Base Performance Level

I built myself a Core 2 Duo rig in January of 2009, complete with an E8400 3.0GHz, 4GB DDR2-1066, and a Radeon HD 4850 512MB. Most of that was tech from mid-2008. I assembled my current PC in January/February of 2011, including an i5-2500K, 8GB DDR3-1600, and a GTX 560 Ti 1GB. It seems like the two major jumps we've seen in the last few years are the adoption of multi-core CPUs and the increase in screen resolution. With that being said, AMD's strategy of throwing more cores at us hasn't exactly gone as well as they would have hoped and many of the programs we're running still don't make full use of 4/6/8-core systems (games especially).

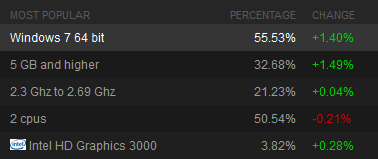

If you look at the Steam Hardware Survey from July 2012, around 50% of people surveyed still use a dual-core system, which beats quad-core setups by over 10 percentage points. In addition, excluding multi-monitor setups, fewer than 2% of people use a display resolution larger than 1920x1200 - 5% are still stuck on 1024x768 and more than 18% of users run at 1366x768, probably laptop owners. Technology might be advancing at a rapid pace, but the majority of us are still using dual-core systems with 2-4GB of RAM and a GPU with 1GB (or less) of VRAM.

Steam Hardware Survey 2012

To further drive the point home, look at the relationship between PC games and consoles. Before the current generation of consoles we had the Xbox, PS2, and Gamecube, which debuted in late 2000 and 2001 in North America and effectively hung around for 5-6 years. Prior to that we had the N64, PlayStation, and Sega Saturn in '96, '95, and '95, respectively, another generation in the 5-6 year range. The Xbox360, PS3, and Wii were released in late 2005 and 2006, and the earliest replacement we'll see is the Wii U which will arrive late 2012/early 2013. Sony and Microsoft haven't even announced their plans for followup systems, so the current generation is going to represent a time period of 6-8 years at minimum.

Our painful reality as PC gamers today is that many of the games we get are console games at heart, developed with the PS3 and Xbox360 in mind and ported to the PC with varying degrees of effort. With popular consoles still using tech from 2005-ish it's hard for developers to justify pushing the envelope graphically on the PC. Hopefully things will change with the new wave of consoles, but the Wii U isn't exactly taking a huge leap forward - we'll probably be waiting for the "Xbox720" and PS4 until we see games with heftier system requirements.

What does it all mean?

Bottom line: if you're rocking a quad-core CPU and DDR3 you'll probably be fine with minimal upgrades to sustain you over the next couple of years. Intel users look to be in better shape than their AMD brethren, but AMD processors are generally cheaper and have greater compatibility across generations than the competition. RAM is dirt cheap right now so there's not much reason to be running anything worse than 8GB of DDR3-1600. Video cards might be the main thing to keep an eye on; they can be easily swapped out (or added for SLI or Crossfire) every year or two, assuming your power supply is beefy enough. We're not even close to saturating PCI-E 2.0, so jumping to PCI-E 3.0 isn't going to be required for a while.

With things like DDR4 and refinements to Intel's tri-gate transistor tech on the horizon I'm sure we'll see sizable jumps in performance in the coming months. Unless you're an enthusiast with cash to burn, though, your current setup might last you longer than you think.
